
In this article we are going to explore the process of writing prompts for your agents. My objective is to highlight some strategies that you can implement in your projects by providing you with a practical example.

The example I'm offering you to explore is the generation part of a RAG pipeline. Many companies nowadays explore the possibility of interacting with their documentation base using LLMs. This means some kind of generation agent has to be created at some point.

Description of the project

Meet HowDoI, an application that generates tutorials on internal company processes based on the documentation available within the company. Since we want to focus only on the generation of the tutorials, we will assume that the documentation has already been normalized and embedded in a vector database, and that the mechanism used to retrieve relevant documents has already been created. Thanks to this hard work we have a very clean input for our generation pipeline.

The input:

The input for our generation pipeline is a list of documents that are all relevant to an end-user prompt.

The required output:

The output that we wish to obtain is a tutorial on how to set up or use an internal tool, based on the documentation of that tool available in the company.

Pretty vague? Yeah, that's on purpose. Your manager will probably be even worse at explaining what they want your agent to do.

Now that we have described what we want our agent to do, let's have a little refresher on what a prompt is.

The structure of a prompt

When using LLMs programmatically, a prompt usually contains three parts, two of which you will interact with very often.

- The system prompt:

The system prompt sets the global personality and behaviour of an LLM agent. This tells it how to answer.

- The user prompt:

The user prompt represents the input from the end user. It contains the task to be executed or the question to be answered. This tells the LLM agent what it needs to do.

- The context:

The context is the memory of the LLM agent. It consists of all the messages (both system and user) that have been sent in the conversation, as well as the answers the agent has already provided. The number of tokens (roughly, word fragments) that an LLM can hold in its context depends on the model you are using.

While you can manually manage the context, you will probably only very rarely touch it.
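To make the three parts concrete, here is a minimal sketch of how they are commonly represented in code: a list of role-tagged messages. The `role`/`content` dictionary layout mirrors the convention used by most chat-style APIs, but the `build_messages` helper and the sample strings are assumptions made for illustration, and no real API is called.

```python
from typing import Optional

Message = dict[str, str]


def build_messages(system_prompt: str, user_prompt: str,
                   history: Optional[list[Message]] = None) -> list[Message]:
    """Assemble the message list sent to a chat-style LLM.

    `history` holds earlier user/assistant turns; together with the
    system prompt and the new user prompt it forms the context.
    """
    messages: list[Message] = [{"role": "system", "content": system_prompt}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_prompt})
    return messages


# Hypothetical earlier exchange that is already part of the context.
history = [
    {"role": "user", "content": "How do I request a laptop?"},
    {"role": "assistant", "content": "Open a ticket in the IT portal."},
]
messages = build_messages(
    system_prompt="You are a documentation agent.",
    user_prompt="And how do I track that ticket?",
    history=history,
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Managing the context manually then simply means deciding which entries of `history` are passed along with each new request.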

If you wish to dig a little deeper into prompt engineering and structure, here are some documentation pages from OpenAI:

Writing the prompt

Step 1 - Understanding what we want to achieve

Before trying to write a prompt blindly, it is always a good idea to understand what it is that we are trying to achieve. Let's start with a question outside the realm of development: what is a tutorial?

According to Diataxis there are four different types of documentation.

- Tutorials – Learning-oriented: A step-by-step process to help the user gain new skills (good for beginners)

- How-to guides – Goal-oriented: Specific, focused, and practical steps to achieve a goal

- Explanations – Understanding-oriented: Provide context and conceptual information

- References – Information-oriented: Technical descriptions and facts

A tutorial is an experience that takes place under the guidance of a tutor. A tutorial is always learning-oriented.

Unfortunately, our users will probably not have a tutor available to rely on for their tutorial. For this reason, let's simplify: let's make a How-to guide.

Step 2 - Coming up with an internal agentic workflow

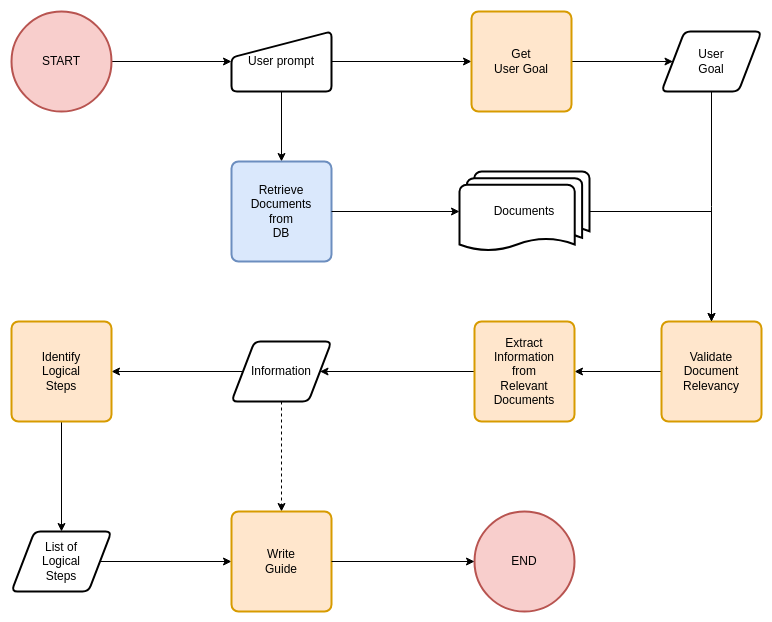

The second step is to mentally abstract the workflow that the agent will have to perform in order to generate a How-to guide. A simple way to do this is to ask yourself the question: "How would I, as a human, go about performing the task?". Personally, it would look something like this:

- Read the user's prompt and figure out what they want.

- Read the list of documents returned by the vector database and understand the information inside.

- Figure out if it is possible to achieve what the user wants with the given information. If it is possible, figure out the logical chain of steps to do so.

- Write a guide that formats the logical steps in the desired layout.

Once you have this, you can translate it into machine terms:

- parse the end-user's prompt and understand the user's goal

- parse the documents provided as input.

- validate their relevance to the user's goal

- extract the relevant information

- identify the logical steps required to achieve the user's goal using the information extracted

- write a guide that formats the logical steps in a certain way

Reading this flow should allow you to identify connections between the different steps of the process and create a mental map of what you'll need to do.

Identify weaknesses early

It is always best to identify the weaknesses in our design early. In this article, I will not address all of them, but I want you to be aware that they are there.

- Reasoning process: if not provided, the agent will have to decide how it is going to achieve step 3 (identifying the logical steps)

- Data exactness: if the agent has no tool for interacting with the user, it will have to guess whenever the user's prompt is unclear or the data in the documents is incomplete - this can lead to hallucinations

- Self-validation: if not provided, the agent will not actually verify that the output helps the user achieve their goal

Step 3 - Identifying prompting strategies

While this article is not an in-depth explanation of prompting, I'm writing on the assumption that you know what the following terms mean: zero-shot, one-shot, few-shot, Chain of Thought (or CoT), ReAct.

We have shown that our reasoning task, while it may look simple on the surface, is composed of multiple steps that include interpretation, retrieval, planning and formatting. Because the steps are so different from each other, a single monolithic agent has a high chance of hallucinating or omitting information if we were to use zero-shot or one-shot prompting.

Using CoT should reduce hallucinations, since it forces the LLM to explain the logical steps of its generation process. This gives the model a chance to notice that something is not right before providing a final response. CoT is especially useful in this project since we have a clear multi-step workflow.

So what about ReAct prompting? This could give the model the ability to self-reflect and re-iterate its generation based on its observations.

However, even though we have identified self-validation as a potential weakness, ReAct is less effective if the agent has no tools. This means we would have to provide it with the tools required to actually validate the output it generates.

While it would be possible to implement a ReAct workflow, I believe that it would be out of scope for the topic of this article, so let's keep it for another time.

Step 4 - Writing the prompt

Version 1

system_prompt = """

You are a documentation agent that creates structured How-To guides based on documents and user goals.

A How-To guide is:

- Goal-driven: it helps users achieve a specific task or solve a practical problem.

- Action-oriented: it provides direct, step-by-step instructions.

- Context-light: it assumes the user already knows the basics and just needs guidance.

Your task is to think step-by-step using the following reasoning chain:

1. Identify the goal the user wants to achieve.

2. Scan the documents for relevant tools, commands, or workflows related to the goal.

3. Break the goal into clear, actionable steps.

4. List the prerequisites needed to perform these steps.

5. Draft a structured internal outline with:

- Title

- Problem/Goal Statement

- Prerequisites

- Step-by-step Instructions (numbered)

- Expected Result or Verification

Do not include conceptual explanations or references unless strictly necessary for the task.

Stay focused on practicality and clarity.

"""

user_prompt = """

Given the following user goal and list of documents, generate a structured How-To guide outline.

Think step-by-step:

1. Understand the goal.

2. Identify relevant tools, commands, and methods from the documents.

3. Plan out each step needed to achieve the goal.

4. Format the result as an internal outline with the following structure:

- Title

- Goal/Problem Statement

- Prerequisites

- Numbered Steps

- Expected Result

=== Query START ===

[USER-QUERY]

=== Query END ===

=== List of Documents START ===

[DOCUMENT-LIST]

=== List of Documents END ===

"""

In this version we have applied the following strategies:

- The system prompt contains the definition of a How-To guide to prevent the model from guessing.

- We are using Chain of Thought to reduce hallucinations.

- We specified the reasoning process by listing specific steps. This prevents the model from guessing.

However, while CoT prompting does reduce hallucinations, it does not remove them entirely. This is because our workflow is complex and requires a cognitively dense context. For this reason we want to think of a different approach.
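Before the Version 1 prompt can be sent, the `[USER-QUERY]` and `[DOCUMENT-LIST]` placeholders have to be replaced with real values. Here is a minimal sketch, assuming the retrieved documents arrive as plain strings. The `fill_template` and `format_documents` helpers, the shortened template and the sample inputs are illustrative choices, not part of the original pipeline.

```python
import re


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace every [PLACEHOLDER] in a single pass.

    Inserted values are not rescanned, so a user query that happens to
    contain "[DOCUMENT-LIST]" is left untouched.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value provided for placeholder [{name}]")
        return values[name]

    return re.sub(r"\[([A-Z][A-Z-]*)\]", substitute, template)


def format_documents(documents: list[str]) -> str:
    """Number the documents so the model can tell them apart."""
    return "\n\n".join(
        f"Document {index}:\n{text}"
        for index, text in enumerate(documents, start=1)
    )


# Shortened stand-in for the Version 1 user prompt.
template = """
=== Query START ===
[USER-QUERY]
=== Query END ===
=== List of Documents START ===
[DOCUMENT-LIST]
=== List of Documents END ===
"""

# Hypothetical query and documents.
prompt = fill_template(template, {
    "USER-QUERY": "How do I reset my VPN password?",
    "DOCUMENT-LIST": format_documents(["VPN guide text", "Password policy text"]),
})
print(prompt)
```

Raising an error on a missing value is a deliberate choice here: a prompt that still contains a raw placeholder tends to fail silently, with the model guessing what was meant.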

Version 2

Instead of depending on a single agent that does the entire workflow, we want to split the work between multiple agents. Each agent in the multi-agent workflow will be responsible for a single reasoning step. This makes the process of each agent less complex and also allows for:

- better error isolation (= easier debugging)

- better modularity (= allows extending the workflow in the future)

- parallelization of agents (= processing multiple documents concurrently)
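The parallelization point can be sketched with Python's standard `concurrent.futures` module. Here `validate_document` is a hypothetical stand-in for one call to a validation agent: it uses a naive keyword check so that the example runs without an LLM, whereas a real pipeline would send the document to the model and interpret its answer.

```python
from concurrent.futures import ThreadPoolExecutor


def validate_document(goal: str, document: str) -> bool:
    """Hypothetical stand-in for one validation-agent call.

    A real implementation would query the LLM; this keyword check
    only exists to keep the sketch runnable.
    """
    return any(word in document.lower() for word in goal.lower().split())


def filter_relevant(goal: str, documents: list[str]) -> list[str]:
    """Validate all documents concurrently and keep the relevant ones."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        # map() returns the verdicts in the same order as the documents.
        verdicts = list(pool.map(lambda doc: validate_document(goal, doc), documents))
    return [doc for doc, keep in zip(documents, verdicts) if keep]


# Hypothetical documents returned by the retrieval step.
documents = [
    "To reset the VPN password, open the self-service portal.",
    "The cafeteria menu changes every Monday.",
]
print(filter_relevant("reset vpn password", documents))
```

Threads are a reasonable fit because each agent call mostly waits on the network; the one-document-per-call design of the validation agent is what makes this split possible.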

Identification of agents

Since we are going to split up our generation agent, we need to identify which tasks will be converted into independent agents. For our project we will keep it simple and follow the structure of our workflow:

- GoalExtractorAgent: Parses the end-user prompt and formats it into a clear user goal

- DocumentValidationAgent: Ensures that the document is relevant to the user goal

- ContextMapperAgent: Analyses the documents and extracts the relevant tools, workflows, commands

- StepPlannerAgent: Generates a draft of logical steps based on the user goal and the available context (tools, workflows, commands)

- DocumentationFormatterAgent: Structures the document into a well-written How-to guide

Since we followed the structure of our workflow, notice how the orange process boxes in our workflow diagram are represented 1 to 1 by an agent.
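Chaining the five agents could look like the sketch below. Everything in it is an assumption made for illustration: `LLMCall` stands for whatever function sends a system and a user prompt to your model and returns its text, each agent is reduced to one call with an abbreviated prompt, and `fake_llm` returns canned answers so that the control flow can be run without a model.

```python
from typing import Callable

# Hypothetical signature: (system_prompt, user_prompt) -> model answer.
LLMCall = Callable[[str, str], str]


def run_pipeline(query: str, documents: list[str], llm: LLMCall) -> str:
    """Run the five agents in sequence; prompts are abbreviated."""
    goal = llm("goal extraction agent", query)

    relevant = [
        doc for doc in documents
        if llm("document validation agent", f"{goal}\n{doc}").strip().lower() == "yes"
    ]
    if not relevant:
        # Stop early instead of letting the later agents guess.
        raise ValueError("no relevant documents for this goal")

    context = llm("context-mapping agent", goal + "\n" + "\n".join(relevant))
    steps = llm("step-planning agent", f"{goal}\n{context}")
    return llm("formatting agent", steps)


def fake_llm(system_prompt: str, user_prompt: str) -> str:
    """Canned answers keyed on the agent role, for demonstration only."""
    if "goal extraction" in system_prompt:
        return "Reset the VPN password"
    if "validation" in system_prompt:
        document = user_prompt.split("\n", 1)[1]
        return "Yes" if "VPN" in document else "No"
    if "context-mapping" in system_prompt:
        return '{"tools": ["self-service portal"]}'
    if "step-planning" in system_prompt:
        return "1. Open the self-service portal."
    return "# How to reset the VPN password\n\n" + user_prompt


guide = run_pipeline(
    "how do i change my vpn password??",
    ["VPN passwords are reset in the self-service portal.",
     "The cafeteria menu changes every Monday."],
    fake_llm,
)
print(guide)
```

Because every agent is just one call with a known input and output, a failing step can be reproduced in isolation by calling it with the intermediate value that triggered the problem.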

GoalExtractorAgent

system_prompt = """

You are a goal extraction agent in a documentation pipeline.

Your role is to extract a clear, specific goal from a natural language query. You should:

- Focus only on what the user wants to achieve.

- Avoid assumptions not grounded in the input.

- Output the result in a single sentence or phrase.

- Do not provide explanations or steps — only the goal.

"""

user_prompt = """

Extract the core user goal from the following query.

Think step by step:

1. Read the query carefully.

2. Identify the task or outcome the user wants to accomplish.

3. Rephrase the intent as a concise, actionable goal.

=== Query START ===

[USER-QUERY]

=== Query END ===

Output:

- Goal: <one sentence>

"""

DocumentValidationAgent

system_prompt = """

You are an AI assistant that determines whether a document contains clear,

non-trivial information related to a specific user-defined topic.

Think step by step before responding. Evaluate whether the document includes

any specific concepts, facts, or descriptions that directly relate to the

topic.

Do not summarize the document or explain your decision.

Only return:

- "Yes" — if the document clearly contains meaningful, topic-relevant

information.

- "No" — if it does not.

Be strict and conservative in your evaluation.

"""

user_prompt = """

My goal is: [USER-GOAL]

Evaluate whether the following document contains clear and directly

relevant information related to this goal. Focus on whether the document

discusses specific facts, ideas, or content that are non-trivially related.

Respond only with "Yes" or "No" — do not provide reasoning or explanations.

=== Document Start ===

[DOCUMENT]

=== Document End ===

"""

ContextMapperAgent

system_prompt = """

You are a context-mapping agent in a documentation generation pipeline.

Your role is to extract all relevant tools, commands, scripts, and workflows from documents that help accomplish a specific user goal. You should:

- Read only the input documents and goal.

- Extract and organize information related to the goal.

- Avoid assumptions or adding unrelated concepts.

- Output the result in a structured JSON format.

"""

user_prompt = """

Based on the user goal and the provided documents, extract relevant content.

Think step by step:

1. Read the goal and understand the outcome the user wants.

2. Go through each document and look for tools, commands, scripts, or workflows that support that outcome.

3. Group the findings into categories: tools, commands, scripts, workflows.

=== Goal Start ===

[USER-GOAL]

=== Goal End ===

=== Documents Start ===

[DOCUMENT-LIST]

=== Documents End ===

Output format:

{

"tools": [...],

"commands": [...],

"scripts": [...],

"workflows": [...]

}

"""

Notice how the output format has been explicitly defined. This means that we now know the input for the next agent.

StepPlannerAgent

system_prompt = """

You are a step-planning agent in a documentation pipeline.

Your role is to take a user goal and a set of relevant tools and workflows, and convert them into practical, logically ordered steps.

You must:

- Write actionable, goal-oriented instructions.

- Use only the tools and commands provided.

- Include prerequisites needed before the steps can be followed.

- Ensure the instructions are complete and logically ordered.

"""

user_prompt = """

Generate a step-by-step guide to help a user achieve the goal using the tools and workflows provided.

Think step by step:

1. Read the goal to understand the end outcome.

2. Identify what the user must prepare (prerequisites).

3. Plan each step logically using only the tools and workflows available.

4. Sequence the steps clearly.

5. Conclude with how the user will know they succeeded.

=== Goal Start ===

[USER-GOAL]

=== Goal End ===

=== Context start ===

[CONTEXT-MAPPER-AGENT-OUTPUT]

=== Context end ===

"""

DocumentationFormatterAgent

We do not need CoT for this agent, as formatting is a mechanical task that requires no reasoning.

system_prompt = """

You are a formatting agent in a documentation generation system.

Your role is to take prewritten guide components and format them into a structured how-to outline.

You must:

- Preserve all provided content.

- Use a consistent, Markdown structure.

- Do not rewrite, add, or remove steps.

- Focus only on layout and presentation.

"""

user_prompt = """

Format the following how-to guide steps content into a publishable documentation outline.

=== Steps Start ===

[STEP-PLANNER-AGENT-OUTPUT]

=== Steps End ===

Output:

A formatted Markdown how-to guide outline.

"""

We could improve this prompt by being explicit about the output that we want to have. For example we want:

- a title

- an introduction paragraph

- a list of followable steps

- a conclusion
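One possible wording of such a prompt is sketched below. The exact section descriptions and length limits are assumptions based on the list above, to be adjusted to your own documentation style.

```python
# Hypothetical revision of the formatter's user prompt with an explicit layout.
user_prompt = """
Format the following how-to guide steps into a publishable Markdown document.

=== Steps Start ===
[STEP-PLANNER-AGENT-OUTPUT]
=== Steps End ===

Output a Markdown document with exactly these parts, in this order:
1. A level-1 heading containing the title.
2. An introduction paragraph of at most three sentences stating the goal.
3. A numbered list of steps, one action per step.
4. A short conclusion describing how to verify the result.
"""
```

Note that asking for an introduction and a conclusion goes slightly beyond pure layout, so the system prompt's "Do not rewrite, add, or remove steps" rule should stay in place to keep the steps themselves untouched.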

Conclusion

We have walked through the process of creating prompts for a concrete example project. Obviously, these prompts will not fit your project as is, but I hope that you have gotten something out of the thought process behind them.

This article shows that there is more to writing a good prompt than meets the eye. I also hope that it reaches stakeholders and raises awareness about the limitations of LLM programming and the amount of work that goes into building a solid workflow.